Model Context Protocol: Bridging the Gap

September 24, 2025

How the Model Context Protocol Is Bridging the Gap Between AI Agents and Enterprise Systems

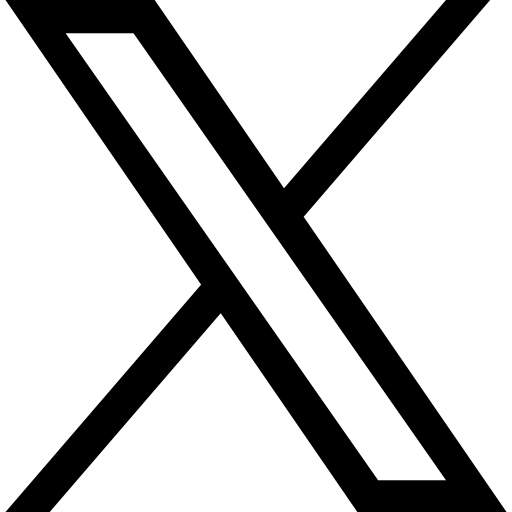

The artificial intelligence landscape has undergone a remarkable transformation over the past few years. What began as an impressive but isolated text-generation capability has evolved into intelligent agentic systems capable of learning, reasoning, planning, and acting autonomously within complex enterprise environments. At the heart of this evolution lies a critical breakthrough: the Model Context Protocol (MCP), a standardized framework that enables AI agents to integrate seamlessly with external tools and data sources.

As an AI researcher who has witnessed this transformation firsthand, I believe MCP represents more than just another protocol specification. It is the foundational infrastructure that will define how agentic AI systems operate in enterprise production environments for years to come. This blog explores the technical journey from generative AI to agentic systems, examines MCP's architecture and implementation patterns, highlights critical security considerations, and offers a point of view on enterprise adoption.

The Journey from Static Generation to Dynamic Agency

The Foundation: What is Chain-of-Thought Reasoning?

The path to modern agentic AI began with a deceptively simple insight: language models perform significantly better when encouraged to "think step by step." Chain-of-Thought (CoT) prompting, introduced by Google's research team, demonstrated that explicitly asking models to articulate their reasoning process dramatically improved performance on complex tasks requiring multi-step logic.

CoT prompting works by providing examples in which the reasoning process is shown explicitly, prompting the LLM to include similar reasoning steps in its own responses. This structured approach to thinking often yields more accurate and explainable outcomes, particularly for math problems, logical reasoning, and planning tasks.

```python
# Example of Chain-of-Thought prompting pattern
cot_prompt = """
Question: If James has 8 apples and gives away 3 to Mary, then buys 5 more apples,
how many apples does he have?
Think step by step:
1. James starts with 8 apples
2. He gives away 3 apples to Mary: 8 - 3 = 5 apples remaining
3. He then buys 5 more apples: 5 + 5 = 10 apples
4. Therefore, James has 10 apples
Answer: 10 apples
"""
```

The Breakthrough: What is the ReAct Framework?

While CoT enabled better reasoning, it still confined models to purely cognitive tasks. The ReAct framework (Reasoning and Acting) changed this paradigm by introducing an iterative loop that interleaves reasoning with external actions.

ReAct operates through a structured cycle of Thought → Action → Observation: the agent reasons about what to do next, executes an action using an external tool, observes the result, and incorporates that finding into subsequent reasoning steps. This pattern mirrors how humans approach problem-solving and significantly expands what AI systems can accomplish.

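```python
# ReAct pattern implementation sketch: the hook methods at the bottom are
# placeholders to be backed by an LLM client and real tool integrations.
from typing import Any


class ReActAgent:
    def __init__(self, tools: dict[str, Any]):
        self.tools = tools
        self.conversation_history: list[str] = []

    async def execute_task(self, user_query: str) -> str:
        max_iterations = 5
        for _ in range(max_iterations):
            # THOUGHT: reasoning step over the query and the trace so far
            thought = await self.think(user_query, self.conversation_history)
            self.conversation_history.append(f"Thought: {thought}")

            if self.should_use_tool(thought):
                # ACTION: tool selection
                action = await self.select_action(thought)
                self.conversation_history.append(f"Action: {action}")

                # OBSERVATION: tool execution and result integration
                observation = await self.execute_tool(action)
                self.conversation_history.append(f"Observation: {observation}")

                # Check if the task is complete
                if self.is_complete(observation, user_query):
                    break
            else:
                # Final answer without tool use
                return await self.generate_final_answer()

        # Task complete or iteration budget exhausted: summarize the trace
        return await self.synthesize_response()

    # Hooks: each would typically wrap an LLM call or a tool invocation.
    async def think(self, query: str, history: list[str]) -> str:
        raise NotImplementedError

    def should_use_tool(self, thought: str) -> bool:
        raise NotImplementedError

    async def select_action(self, thought: str) -> Any:
        raise NotImplementedError

    async def execute_tool(self, action: Any) -> Any:
        raise NotImplementedError

    def is_complete(self, observation: Any, query: str) -> bool:
        raise NotImplementedError

    async def generate_final_answer(self) -> str:
        raise NotImplementedError

    async def synthesize_response(self) -> str:
        raise NotImplementedError
```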
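The agent class above leaves its reasoning and tool-selection hooks undefined. As a minimal, self-contained illustration of the same Thought → Action → Observation cycle, the sketch below replaces the LLM with a hard-coded `scripted_policy` and registers a single hypothetical `add` tool; both are assumptions made only so the loop can run end to end.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    thought: str
    tool: Optional[str] = None    # tool to call next, if any
    tool_input: str = ""
    answer: Optional[str] = None  # set once the policy is ready to answer


# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}


def scripted_policy(query: str, history: list[str]) -> Step:
    """Stand-in for an LLM: call the add tool once, then answer from its result."""
    observations = [h for h in history if h.startswith("Observation: ")]
    if not observations:
        return Step(thought="I should total the changes with a tool.", tool="add", tool_input="8,-3,5")
    result = observations[-1][len("Observation: "):]
    return Step(thought="The tool returned the final count.", answer=result)


def react_loop(query: str, policy, tools, max_iterations: int = 5):
    history: list[str] = []
    for _ in range(max_iterations):
        step = policy(query, history)                                  # THOUGHT
        history.append(f"Thought: {step.thought}")
        if step.answer is not None:
            return step.answer, history
        history.append(f"Action: {step.tool}[{step.tool_input}]")     # ACTION
        tool = tools.get(step.tool)
        observation = tool(step.tool_input) if tool else f"Unknown tool: {step.tool}"
        history.append(f"Observation: {observation}")                 # OBSERVATION
    return None, history  # iteration budget exhausted


answer, trace = react_loop("How many apples does James have?", scripted_policy, TOOLS)
print(answer)  # 10
```

Recording every step in `history` is what lets the next "thought" condition on earlier observations; with a real model, that trace is what gets fed back into the prompt.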

The Evolution: How Do Tools Combine with LLMs to Make AI Agents Possible?

The combination of CoT reasoning and ReAct's action capabilities laid the groundwork for agentic AI: systems that can operate with greater independence, long-term planning, and collaborative capabilities. Unlike traditional AI that follows predefined rules or requires constant human intervention, agentic AI systems can adapt to changing conditions and operate for extended periods with minimal oversight.

This progress has been driven by several key factors:

- Enhanced reasoning capabilities through advanced prompting techniques

- Tool integration frameworks that allow AI to interact with external systems

- Multi-agent collaboration patterns that let specialized agents divide and coordinate work

- Persistent memory and context management for preserving state across interactions

However, as these systems became more complex, a critical challenge emerged: the lack of standardization in how AI agents connect to external tools and sources of data.

Understanding the Model Context Protocol

Why Do AI Agents Need Standardized Tool Integration?

Before MCP, every AI application required a custom integration for each data source or tool it needed to access. This created what Anthropic describes as an "N×M integration problem": N different AI applications each needed custom connectors for M different tools and systems, yielding up to N×M bespoke integrations. The result was fragmented, hard-to-maintain integrations that didn't scale effectively.

MCP tackles this challenge by providing a universal, open protocol that lets developers build secure, two-way connections between their data sources and AI-powered tools. A popular analogy for MCP is that of a "USB-C port for AI applications": just as USB-C provides a standardized way to connect devices to various peripherals, MCP provides a standardized way to connect AI models to different data sources, tools, and technologies.
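The arithmetic behind the N×M argument is simple. Without a shared protocol, every (application, tool) pair needs its own connector; with a common protocol, each application implements a client once and each tool is wrapped as a server once, so the work grows roughly as N + M. A quick sketch with illustrative numbers (not data from any real deployment):

```python
def integration_count(n_apps: int, m_tools: int) -> dict[str, int]:
    """Connectors to build for N applications and M tools/data sources."""
    return {
        # Without a shared protocol: one bespoke connector per (app, tool) pair.
        "point_to_point": n_apps * m_tools,
        # With a shared protocol: one client per app plus one server per tool.
        "shared_protocol": n_apps + m_tools,
    }


print(integration_count(5, 20))  # {'point_to_point': 100, 'shared_protocol': 25}
```

The gap widens as either side grows, which is why the benefit shows up most clearly in large enterprises with many applications and many internal systems.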

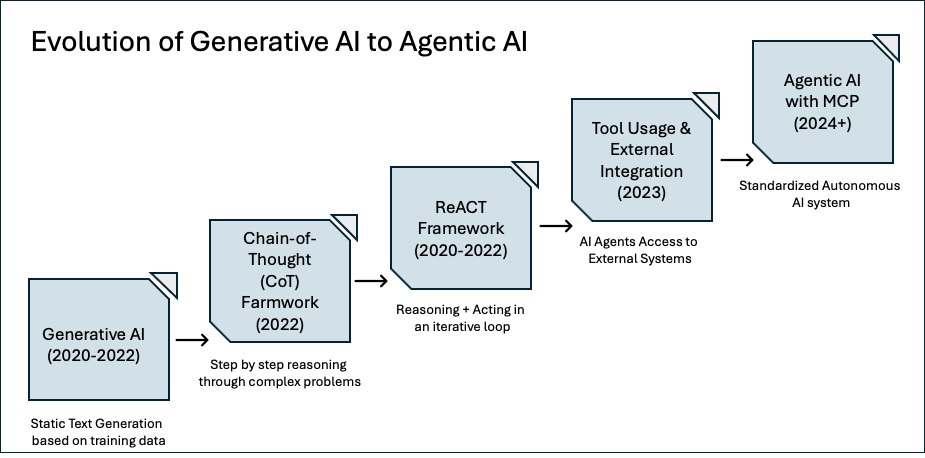

MCP Architecture: How does MCP enable standardized communication between AI models and external tools and data sources?

The diagram depicts the components of a typical MCP setup and the role each one plays in helping agents scale.

The Model Context Protocol follows a clean client-server architecture with three core components working in concert:

MCP Host

The MCP Host is the AI-powered application or agent environment (such as Claude Desktop, an IDE plugin, or any custom LLM-based application). This is what end users interact with, and it can connect to multiple MCP servers simultaneously.

MCP Client

The MCP Client is an intermediary that the host uses to manage server connections. Each MCP client maintains a dedicated connection to exactly one MCP server, which keeps servers isolated from one another for security purposes. The host creates a client for each server it needs to use, ensuring a one-to-one relationship.

MCP Server

The MCP Server is a program that implements the MCP standard and exposes specific capabilities, typically a collection of tools, access to data resources, and predefined prompts for a particular domain. Servers communicate with databases, APIs, file systems, or any other external data source.
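The host, client, and server roles can be sketched in-process. The classes below (`FakeServer`, `Client`, `Host`) are hypothetical stand-ins, not the API of any MCP SDK; real clients talk to servers over a transport such as stdio or HTTP. What the sketch does show is the one-to-one mapping: the host creates one client per server and routes each call only through the client dedicated to that server.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class FakeServer:
    """Hypothetical in-process stand-in for an MCP server that exposes tools."""
    name: str
    tools: dict[str, Callable[..., Any]]


@dataclass
class Client:
    """Holds the connection to exactly one server and nothing else."""
    server: FakeServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, arguments: dict[str, Any]) -> Any:
        return self.server.tools[tool](**arguments)


@dataclass
class Host:
    """The AI application: creates one client per connected server."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: FakeServer) -> None:
        self.clients[server.name] = Client(server)  # one client per server

    def call_tool(self, server_name: str, tool: str, arguments: dict[str, Any]) -> Any:
        # Route the call through the client dedicated to that server only.
        return self.clients[server_name].call_tool(tool, arguments)


host = Host()
host.connect(FakeServer("files", {"read_file": lambda path: f"<contents of {path}>"}))
host.connect(FakeServer("math", {"add": lambda a, b: a + b}))
print(host.call_tool("math", "add", {"a": 2, "b": 3}))  # 5
```

Because each client only knows its own server, the "files" server never sees calls or results that belong to the "math" server.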

What are the core components of MCP-based communication?

MCP defines three core primitives that servers expose to clients:

- Tools: Functions that the LLM can invoke to perform actions or retrieve information

- Resources: Data sources that provide context to the LLM (files, database records, or API responses in formats such as JSON or XML)

- Prompts: Pre-defined prompt templates that can be customized with parameters
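On the wire, MCP messages are JSON-RPC 2.0 requests and responses, and the three primitives are reached through methods such as `tools/list`, `tools/call`, `resources/read`, and `prompts/get`. The toy dispatcher below is a sketch under that assumption: the registries are hypothetical, the result shapes only approximate those in the specification, and a production server would normally be built on an official MCP SDK instead.

```python
import json

# Hypothetical registries for the three primitives.
TOOLS = {
    "add": {
        "description": "Add two integers",
        "inputSchema": {"type": "object",
                        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
                        "required": ["a", "b"]},
        "fn": lambda a, b: a + b,
    },
}
RESOURCES = {"file:///notes/todo.txt": "1. Review MCP spec"}
PROMPTS = {"summarize": "Summarize the following text in three bullet points:\n{text}"}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to the matching primitive."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": n, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for n, t in TOOLS.items()]}
    elif method == "tools/call":
        value = TOOLS[params["name"]]["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    elif method == "resources/read":
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}
    elif method == "prompts/get":
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        # -32601 is JSON-RPC's standard "method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": -32601, "message": f"Unknown method: {method}"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
print(handle(json.dumps(request)))
```

The `id` echoed in each response is what lets a client match replies to requests, which matters once several calls are in flight at the same time.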

What security mechanisms does MCP provide?

MCP's architecture was designed with security considerations in mind. Most of these safeguards, however, cannot be enforced by the protocol itself; the specification sets out principles that hosts and servers are expected to implement, chiefly the following:

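- Explicit Permissions: Hosts are expected to obtain explicit user approval before connecting to a server, sharing user data with it, or invoking its tools

- Connection Isolation: Each client holds a separate connection to a single server, so a server cannot see the full conversation or other servers' data

- Privilege Control: Tools should run with specifically granted, narrowly scoped privileges rather than system-wide access

- Local-First Deployment: The initial focus on local servers, typically launched by the host and communicating over stdio, keeps data and execution under the user's control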
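In practice, checks like these are implemented by the host application around each tool call. The sketch below combines an allowlist (privilege control) with a per-call consent callback (explicit permission); `guarded_call`, `ToolPermissionError`, and the `approve_reads_only` rule are hypothetical, standing in for a real policy store and confirmation dialog.

```python
from typing import Any, Callable


class ToolPermissionError(Exception):
    """Raised when a tool call is blocked by host policy."""


def guarded_call(
    tool_name: str,
    arguments: dict[str, Any],
    tools: dict[str, Callable[..., Any]],
    allowlist: set[str],
    ask_user: Callable[[str, dict[str, Any]], bool],
) -> Any:
    """Run a tool only if it is allowlisted and the user approves this exact call."""
    # Privilege control: only tools explicitly granted to this agent may run.
    if tool_name not in allowlist:
        raise ToolPermissionError(f"{tool_name!r} is not on the allowlist")
    # Explicit permission: show the user the exact call and require approval.
    if not ask_user(tool_name, arguments):
        raise ToolPermissionError(f"user declined {tool_name!r}")
    return tools[tool_name](**arguments)


def approve_reads_only(tool_name: str, arguments: dict[str, Any]) -> bool:
    """Stand-in for a confirmation dialog: approves only read-style tools."""
    return tool_name.startswith("read_")


tools = {
    "read_file": lambda path: f"<contents of {path}>",
    "delete_file": lambda path: f"deleted {path}",
}
print(guarded_call("read_file", {"path": "notes.txt"}, tools,
                   {"read_file", "delete_file"}, approve_reads_only))
```

Even with `delete_file` on the allowlist, the consent callback declines it here, so that call raises `ToolPermissionError` instead of running. Layering the two checks means a misconfigured allowlist alone is not enough to trigger a destructive action.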

What are the most popular MCP servers?

The MCP ecosystem has experienced explosive growth, with more than 100 servers now available across various categories. Here are some of the most trusted and widely adopted implementations:

Official and Enterprise-Grade Servers

GitHub MCP Server

The official GitHub MCP server provides comprehensive integration with GitHub's REST API, enabling AI agents to read issues, manage pull requests, trigger CI workflows, and perform repository management tasks. With over 2.8k GitHub stars at the time of writing, it is widely regarded as the "gold standard" for building secure, API-aware agents.

Key capabilities:

- Repository management and code operations

- Issue and pull request automation

- CI/CD pipeline integration

- Code scanning and security features

Database Connectors

- PostgreSQL MCP: Enables natural language database interactions

- SQLite MCP: Lightweight database integration for local development

- MongoDB MCP: NoSQL database connectivity for modern applications
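A database connector of this kind typically exposes a query tool, and restricting that tool to reads applies the privilege-control idea from the security section. The sketch below uses Python's built-in `sqlite3` module; `make_readonly_query_tool` is a hypothetical handler, not code from any published SQLite MCP server. `PRAGMA query_only` makes SQLite itself reject writes on that connection, which is sturdier than inspecting the SQL text.

```python
import sqlite3


def make_readonly_query_tool(conn: sqlite3.Connection):
    """Hypothetical 'query' tool handler over a read-only SQLite connection."""
    # Have SQLite refuse any write on this connection.
    conn.execute("PRAGMA query_only = ON")

    def query(sql: str) -> list[dict]:
        cur = conn.execute(sql)
        columns = [d[0] for d in cur.description]
        # Return rows as dicts so the result serializes cleanly to JSON.
        return [dict(zip(columns, row)) for row in cur.fetchall()]

    return query


# Illustrative in-memory data set up before the tool is created.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, total REAL)")
conn.executemany("INSERT INTO orders (region, total) VALUES (?, ?)",
                 [("EU", 120.0), ("US", 80.0), ("EU", 30.0)])
conn.commit()

query = make_readonly_query_tool(conn)
print(query("SELECT region, SUM(total) AS revenue FROM orders GROUP BY region ORDER BY region"))
# [{'region': 'EU', 'revenue': 150.0}, {'region': 'US', 'revenue': 80.0}]
```

An agent that generates SQL from natural language can then explore the data freely, while a statement such as `DELETE FROM orders` fails with a database error instead of modifying anything.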

Development and Creative Tools

Playwright MCP

Provides web automation capabilities, enabling AI agents to interact with web applications for testing, scraping, and workflow automation.

Future Outlook and Industry Trends

The MCP landscape is rapidly evolving, with several key trends shaping its future:

Standardization and Governance

Industry experts predict that MCP will become a widely adopted standard, with major cloud providers such as AWS and Microsoft Azure investing heavily in MCP-based solutions. The protocol's maintainers are also expected to work with standards bodies to pursue formal standardization.

Enterprise Adoption Growth

Some industry projections point to 25% growth in MCP adoption and 30% growth in LLM integration with enterprise data sources over the next year. This growth is driven by increasing demand for context-aware AI applications and the need for standardized integration protocols.

Advanced Security Framework Evolution

The security landscape is maturing rapidly, with comprehensive frameworks based on defense-in-depth and Zero Trust principles specifically tailored for MCP deployments. Organizations are implementing sophisticated threat detection, compliance reporting, and governance controls.

Remote and Cloud Integration

The roadmap includes enhanced support for remote servers, improved authentication schemes, and better distribution mechanisms. These improvements should significantly lower the barrier to entry and enable broader enterprise adoption.

Conclusion: Preparing for the Agentic Future

The Model Context Protocol represents a fundamental shift in how we architect AI systems for enterprise environments. As we've explored, MCP solves critical standardization challenges while introducing new security considerations that demand careful attention.

For enterprise AI architects, the key takeaways are clear:

- MCP is inevitable: The standardization benefits are broad and substantial, and industry momentum is building rapidly. Shopify recently launched its Storefront MCP server, which is gaining strong traction, and Stripe, a popular payment-processing platform, has made its agent toolkit available

- Security must be paramount: Early implementation of comprehensive security controls is essential for enterprise success

- Phased adoption works: Start with controlled deployments and expand based on lessons learned

- Community engagement matters: Active participation in the evolving standards and security frameworks benefits everyone

The journey from static generative AI to dynamic agentic systems powered by standardized protocols like MCP represents one of the most significant architectural shifts in modern AI development. Organizations that master these concepts now will be best positioned to leverage the full potential of autonomous AI systems in the years ahead.

As we continue to push the boundaries of what's possible with agentic AI, the Model Context Protocol will undoubtedly play a crucial role in enabling secure, scalable, and interoperable AI systems across enterprise environments. The question is not whether to adopt MCP, but how quickly and securely organizations can integrate it into their AI infrastructure. In my next blog, I’ll dive into the security challenges, potential vulnerabilities, and best practices around MCP, an area that is becoming increasingly important in today’s landscape.